Responsible AI: key concepts and best practices

In response to the rapid expansion of artificial intelligence (AI), the concept of Responsible AI has emerged as a solution to critical issues of security, reliability, and ethics. But how can we balance technological innovation with risk management? To delve deeper into this topic and take concrete action, BeTomorrow’s teams are trained in best practices for responsible AI, ensuring the implementation of ethical and controlled solutions in every project.

In this article, we share practical, straightforward ways to put the principles of Responsible AI into practice.

What is Responsible AI?

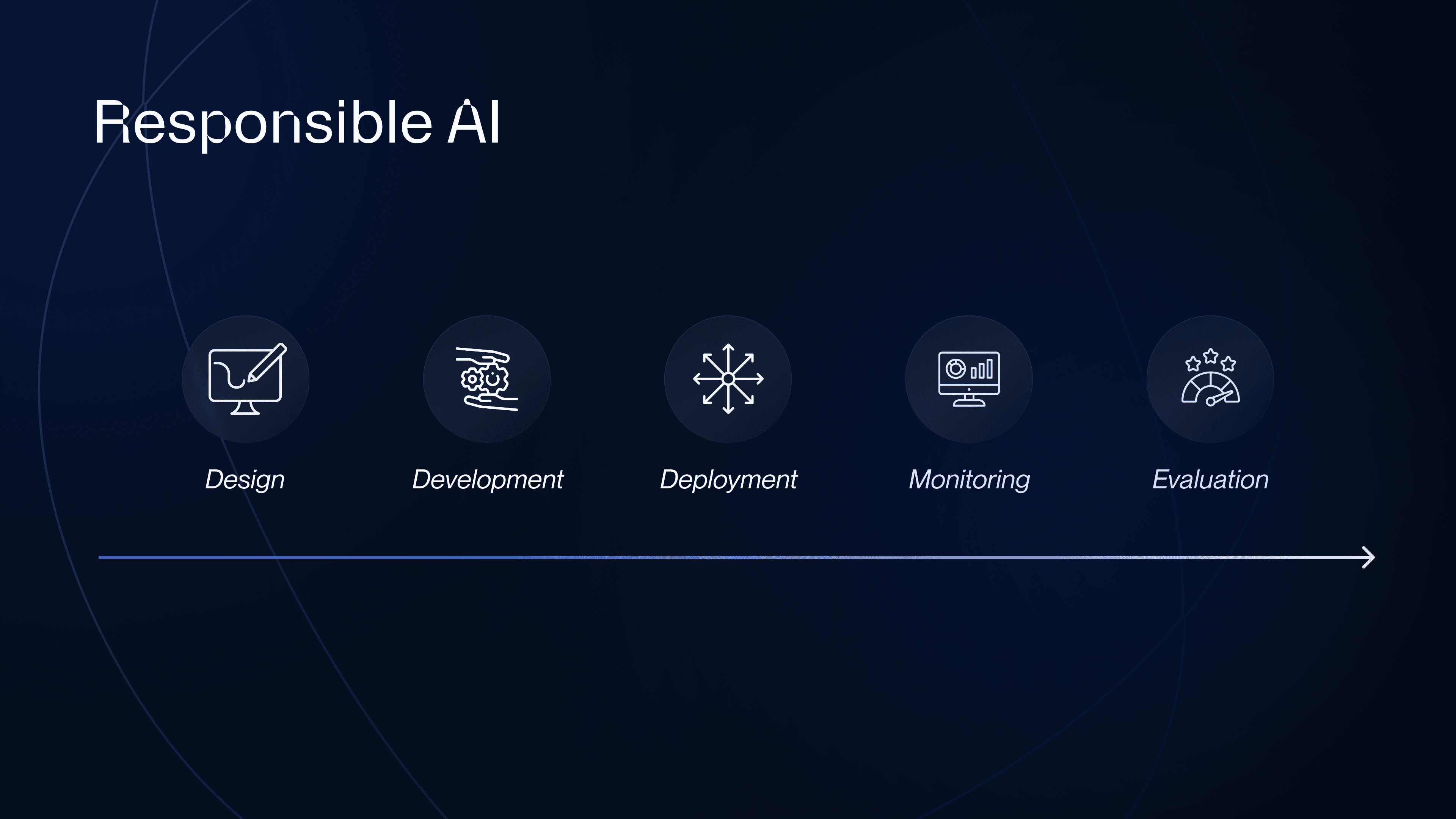

Responsible AI refers to a set of practices and principles aimed at ensuring that artificial intelligence (AI) systems are transparent, reliable, and capable of limiting potential risks and negative consequences. These principles must be applied throughout the entire AI application lifecycle, from the initial design phase to development, deployment, monitoring, and evaluation.

To leverage AI responsibly, companies must ensure that their systems:

Are fully transparent and accountable, with oversight and control mechanisms in place.

Are governed by an executive team responsible for the Responsible AI strategy.

Are developed by teams trained in responsible AI principles and practices.

Are clearly documented to make algorithmic outputs easily explainable.

Which types of AI require Responsible AI?

Responsible AI applies to all types of artificial intelligence systems, whether based on traditional machine learning models or advanced generative models.

Traditional AI

Traditional machine learning models perform specific tasks based on the training data they are given. These are typically prediction or classification tasks, for example sentiment analysis or image recognition. Each model is limited to a single task and requires a representative, balanced dataset.

Examples: recommendation engines, voice assistants, fraud detection systems.

Generative AI

Generative AI relies on foundation models (FMs), such as language models pre-trained on vast amounts of data. These versatile models can generate content (text, code, images, etc.) in response to user instructions.

Examples: chatbots, automated code generation, and image creation.

The potential of foundation models is immense, with an impact in several areas:

Creativity: production of new content (texts, images, videos, music).

Productivity: automation of repetitive tasks and increased efficiency.

Connectivity: creation of new forms of interaction with customers and optimization of collaboration.

Responsible AI: common challenges in traditional and generative AI

Model accuracy

The primary challenge developers face in AI applications is accuracy. Both traditional and generative AI applications rely on models trained with datasets. These models can only make predictions or generate content based on the data used during training. If training is insufficient, the results will be inaccurate. Addressing bias and variance issues in your model is therefore essential.

Bias

Bias is one of the greatest challenges for AI system developers. Bias in a model means that important features of the dataset are missed, often because the model is too simple to represent them. It is measured by the difference between the model’s average (expected) prediction and the actual values being predicted.

If this difference is small, the model has low bias.

If the difference is large, the model has high bias.

A high-bias model is underfitted, meaning it fails to capture enough variability in the data features and, as a result, performs poorly on the training data.

Example of bias in facial recognition: A facial recognition system trained primarily on images of individuals with light skin may perform significantly worse when recognizing individuals with darker skin. Here the bias comes from the training data itself, in which darker-skinned individuals are underrepresented, rather than from an overly simple model.

A 2018 study (Gender Shades, by Joy Buolamwini and Timnit Gebru) found that commercial gender classification systems from major companies showed higher error rates for women with darker skin tones. For instance, Microsoft’s software exhibited an error rate of 20.8% for this demographic, compared to 0% for light-skinned men.
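A disparity of this kind only becomes visible when error rates are broken down by group instead of being averaged over the whole test set. The following is a minimal sketch of such a breakdown. The group names and the evaluation records are hypothetical and invented for illustration; they are not taken from the study.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """Return {group: share of wrong predictions} for (group, y_true, y_pred) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical evaluation results, invented for illustration only.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

rates = error_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
for group, rate in sorted(rates.items()):
    print(f"{group}: error rate {rate:.0%}")
print(f"largest gap between groups: {gap:.0%}")
```

A single overall accuracy figure would hide the gap entirely, which is why per-group reporting is a reasonable first check before deployment.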

Variance

Variance represents another challenge for developers. It refers to the model’s sensitivity to fluctuations or noise in the training data. A problem arises when the model treats these fluctuations as important features. High variance indicates that the model has become so familiar with the training data that it can make very precise predictions by capturing all data features, including noise.

However, when new data is introduced, the model’s accuracy decreases, as this new data may contain different characteristics not accounted for during training. This leads to the issue of overfitting. An overfitted model performs well on the training data but fails during evaluation on new data because it has "memorized" the seen data rather than generalizing to unseen examples.

Bias-variance trade-off

The bias-variance trade-off involves finding the right balance between bias and variance when optimizing your model. The objective is to train a model that is neither underfitted nor overfitted by minimizing both bias and variance for a given dataset.
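For squared-error loss, this balance can be written explicitly. The formula below is the standard textbook decomposition, not something specific to this article. The notation is introduced here as an assumption: $f$ is the true function, $\hat{f}$ the trained model, $y = f(x) + \varepsilon$ the observed value, and $\sigma^2$ the variance of the noise $\varepsilon$. Expectations are taken over training sets and noise.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

The noise term cannot be reduced by any model, so in practice the goal is to lower the sum of the first two terms rather than drive either one to zero.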

Underfitted (high bias, low variance): The regression line is overly simplistic and fails to capture data features.

Overfitted (low bias, high variance): The regression curve fits the data too perfectly, capturing even noise, which leads to poor performance on new data.

Balanced (low bias, low variance): The regression curve captures enough data features without being affected by noise.
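The three cases above can be reproduced on a toy problem. The sketch below uses k-nearest-neighbour regression instead of the regression curves described here, purely because it needs no external library: a very small k memorizes the training points (high variance), while a k equal to the training set size always predicts the mean (high bias). The dataset, the noise level, and the values of k are assumptions chosen for illustration.

```python
import math
import random

def knn_predict(train, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def mse(train, data, k):
    """Mean squared error of the k-NN model on `data`."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)

def make_data(n, rng):
    """Noisy samples of sin(x) on [0, 2*pi]; a synthetic dataset for illustration."""
    points = []
    for _ in range(n):
        x = rng.uniform(0.0, 2.0 * math.pi)
        points.append((x, math.sin(x) + rng.gauss(0.0, 0.3)))
    return points

rng = random.Random(0)
train = make_data(60, rng)
test = make_data(200, rng)

# k = 1 memorizes the training set; k = len(train) always predicts the mean.
results = {k: (mse(train, train, k), mse(train, test, k)) for k in (1, 7, len(train))}
for k, (train_err, test_err) in results.items():
    print(f"k={k:>2}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

With k = 1 the training error is exactly zero, because each training point is its own nearest neighbour, yet the model still makes errors on the test set. That gap between training and test error is the signature of overfitting. With k = 60 both errors are high, which is the signature of underfitting.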

Here are some methods to reduce bias and variance errors:

Continuous Monitoring: deploying an AI model does not guarantee stable performance over time. A model can drift and produce unexpected or even harmful results if its behavior is not continuously monitored. For example, consider a financial credit scoring model initially trained on stable data. If economic conditions change (e.g., inflation, new banking policies) without updating the training data, the algorithm may unintentionally discriminate against certain categories of applicants. This phenomenon is known as "data drift," where the statistical distribution of input data changes relative to the training dataset, leading to bias and errors.

Cross-Validation: a technique for evaluating machine learning models by training several models on data subsets and testing them on complementary subsets. This helps detect overfitting.

Increasing Data Volume: adding more samples to broaden the model’s learning scope.

Regularization: penalizing extreme weight values during training to limit overfitting, for example in linear models.

Simpler Models: using simpler model architectures to reduce overfitting. Conversely, an underfitted model is already too simple and may need a more expressive architecture.

Dimensionality Reduction: using techniques such as principal component analysis (PCA) to decrease the number of features in a dataset while retaining as much information as possible.

Early Stopping: sometimes, training for less time yields better results. Stopping once the error on validation data no longer improves, and tuning the number of epochs iteratively, helps keep a neural network from overfitting.
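Two of the methods above, cross-validation and regularization, are often used together: cross-validation estimates how well each regularization strength generalizes. The sketch below shows this for a one-feature ridge regression in plain Python. The synthetic data, the fold count, and the candidate penalty values are assumptions chosen for illustration, and the function names are hypothetical.

```python
import random

def fit_ridge(points, lam):
    """Fit y = w*x + b, with the penalty lam * w**2 added to the squared error."""
    n = len(points)
    x_mean = sum(x for x, _ in points) / n
    y_mean = sum(y for _, y in points) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in points)
    sxx = sum((x - x_mean) ** 2 for x, _ in points)
    w = sxy / (sxx + lam)  # a larger lam shrinks the weight toward zero
    return w, y_mean - w * x_mean

def k_fold_indices(n, k):
    """Split indices 0..n-1 into k disjoint validation folds."""
    return [list(range(i, n, k)) for i in range(k)]

def cross_val_mse(points, lam, k=5):
    """Average validation MSE over k folds."""
    scores = []
    for fold in k_fold_indices(len(points), k):
        held_out = set(fold)
        train = [p for i, p in enumerate(points) if i not in held_out]
        valid = [points[i] for i in fold]
        w, b = fit_ridge(train, lam)
        scores.append(sum((w * x + b - y) ** 2 for x, y in valid) / len(valid))
    return sum(scores) / k

# Synthetic data: y = 2x + 1 plus Gaussian noise.
rng = random.Random(1)
points = []
for _ in range(40):
    x = rng.uniform(-1.0, 1.0)
    points.append((x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.5)))

for lam in (0.0, 1.0, 10.0, 100.0):
    w, _ = fit_ridge(points, lam)
    print(f"lambda={lam:>5}  w={w:.3f}  cv_mse={cross_val_mse(points, lam):.3f}")
```

Each data point is used for validation exactly once, so the score never rewards a model for memorizing the points it is evaluated on. A penalty that is too strong shrinks the weight so far that the model underfits, which shows up as a higher cross-validated error.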

What are the specific challenges of Generative AI?

While generative AI offers unique benefits, it also presents specific challenges. These include toxicity, hallucinations, intellectual property concerns, plagiarism and fraud, and workforce disruption.

Toxicity

Toxicity refers to the generation of offensive, disturbing, or inappropriate content. Defining and delimiting toxicity is complex, because what counts as toxic is partly subjective. The line between moderating harmful content and censorship is often blurred and depends on cultural context. For instance, should quotes that seem offensive out of context be censored, even if they are explicitly labeled as quotations? From a technical standpoint, it is also hard to detect offensive content that is phrased subtly or indirectly, without explicitly provocative language.
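Both difficulties, quotation and indirect phrasing, show up as soon as a naive filter is tried. The sketch below is a deliberately simplistic keyword filter, not a recommended moderation technique. The word list and the example sentences are hypothetical.

```python
import re

BLOCKLIST = {"idiot", "stupid"}  # hypothetical, deliberately tiny word list

def keyword_flag(text):
    """Flag text that contains any blocklisted word (case-insensitive)."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return bool(words & BLOCKLIST)

examples = [
    "You are an idiot.",                                   # explicit insult
    "The reviewer called the plan 'stupid' in the memo.",  # quotation of an insult
    "People like you should stay quiet.",                  # hostile, no listed word
]

for text in examples:
    print(f"{keyword_flag(text)!s:<5}  {text}")
```

The filter flags the quoted word in the second sentence even though the sentence only reports it, and it misses the third sentence because hostility expressed without a listed word is invisible to it. Handling such cases requires models that take context into account.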

Hallucinations

Hallucinations refer to statements that seem plausible but are factually incorrect. Large language models (LLMs) are probabilistic: they generate text by sampling each next word from a probability distribution, without checking facts. As a result, these errors are particularly common in factual or scientific contexts. A typical case involves generating nonexistent scientific citations. For example, when asked, "Cite articles by this author," the model may produce realistic-looking but fictional references with plausible titles and co-authors.
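The sampling step behind this behavior can be sketched in a few lines. The example below is a toy illustration, not how any particular LLM is implemented. The candidate tokens, their scores, and the temperature values are invented.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]

# Hypothetical next-token candidates and scores for the prompt
# "The paper was published in ..."; the numbers are invented.
tokens = ["2017", "2018", "2019", "Nature"]
logits = [2.0, 1.6, 1.2, 0.3]

rng = random.Random(0)
for temperature in (0.5, 1.0, 1.5):
    probs = softmax(logits, temperature)
    sample = rng.choices(tokens, weights=probs, k=1)[0]
    summary = ", ".join(f"{t}={p:.2f}" for t, p in zip(tokens, probs))
    print(f"T={temperature}: {summary} -> sampled {sample}")
```

Every candidate keeps a nonzero probability, so a fluent but wrong continuation can always be drawn. Nothing in the sampling step checks the output against a source of truth.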

Intellectual property

Early generations of LLMs raised concerns regarding intellectual property compliance, as they could reproduce text or code passages identical to their training data, posing confidentiality and copyright issues. Although improvements have reduced such occurrences, generating nuanced derivative content remains problematic. For instance, a generative image model might create an image of a "cat on a skateboard" in Andy Warhol's style. If the work convincingly imitates Warhol's aesthetic based on training data, it may raise legal objections.

Plagiarism and fraud

The creative capabilities of generative AI raise concerns about its use in academic and professional contexts. It can be used to write essays, application writing samples, or graded assignments, thereby facilitating cheating. The debate remains ongoing, with some institutions strictly prohibiting the use of generative AI, while others advocate for adapting educational practices. The central challenge lies in verifying the human origin of content.

Workforce disruption

The ability of generative AI to produce high-quality texts and images, pass standardized tests, draft articles, or enhance the grammar of existing documents raises concerns about its disruptive potential in certain professions. Although the idea of widespread replacement may be premature, the transformative impact on the nature of work is undeniable, as many tasks previously considered non-automatable can now be performed by AI systems.

The 8 fundamental principles of Responsible AI

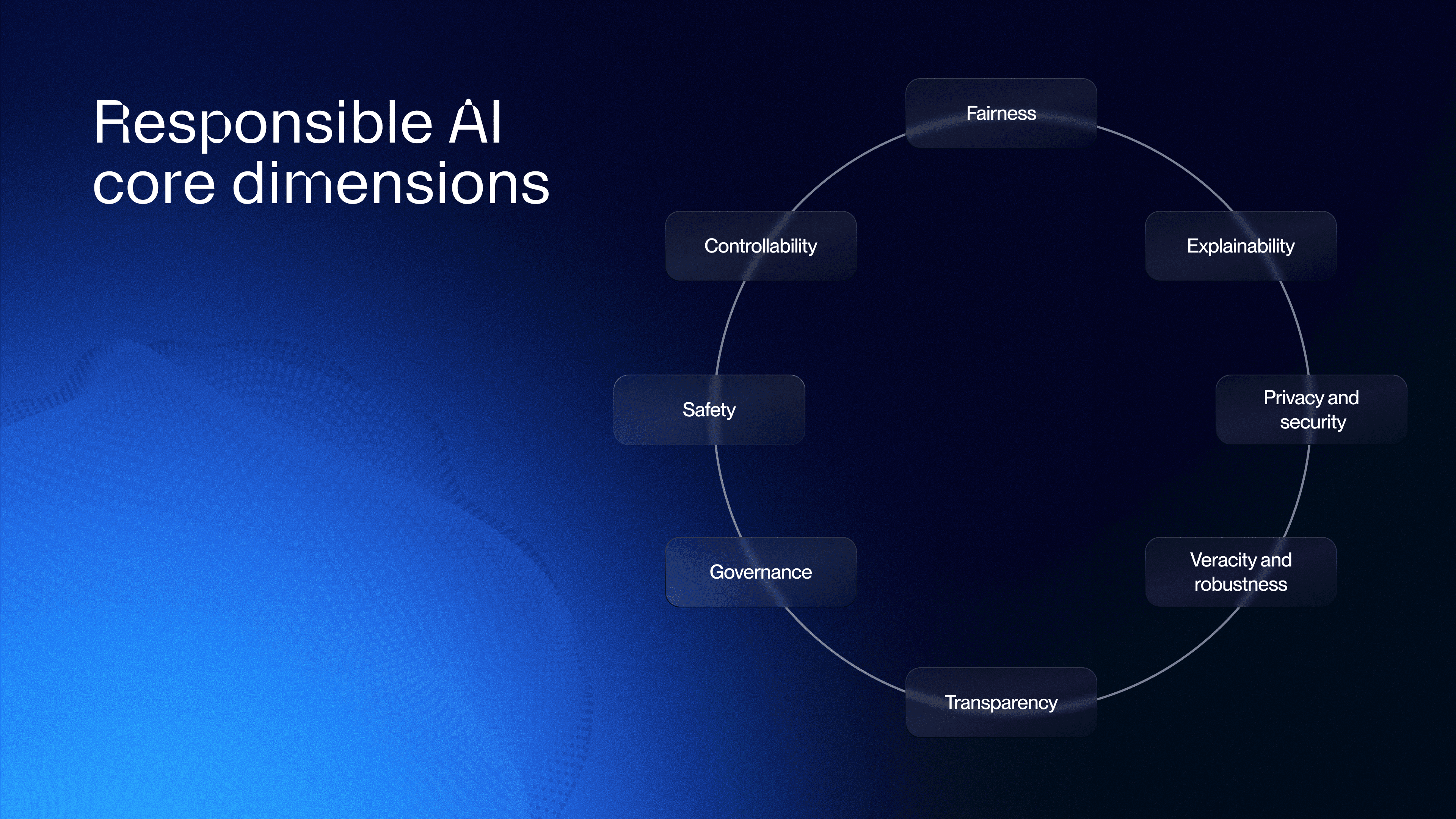

The key principles of responsible AI encompass fairness, explainability, privacy and security, transparency, veracity and robustness, governance, safety, and controllability. None of these principles can be considered in isolation; they are all interconnected in the design of truly responsible AI.

Fairness

Fairness is essential to ensure inclusive AI systems, prevent discrimination, and adhere to ethical and legal standards. A fair AI system fosters trust and avoids systemic biases.

Explainability

Explainability refers to a model’s ability to justify its internal mechanisms and decisions in a manner comprehensible to humans. It is crucial for detecting biases, building trust, and correcting potential misinterpretations.

Privacy and security

Privacy ensures that users retain control over the use of their personal data. Security ensures that this data is protected from unauthorized access, thereby maintaining trust in the system.

Transparency

Transparency involves providing stakeholders with clear information about an AI system’s functioning, limitations, and capabilities. It enables better understanding of decision-making processes, identification of potential biases, and assurance of ethical AI use.

Veracity and robustness

A robust model must operate reliably, even in unexpected conditions or when faced with errors. It must remain accurate and secure amid variations in data or context, ensuring consistent performance.

Governance

AI governance involves a set of processes that define, enforce, and monitor responsible practices within an organization. It ensures respect for rights, laws, and ethical standards, such as intellectual property protection and regulatory compliance.

Safety

Safety in responsible AI involves designing systems that minimize risks of bias, errors, and unintended consequences, thereby protecting individuals and society.

Controllability

Controllability refers to the ability to monitor and adjust an AI system’s behavior to remain aligned with human values and intentions. A controllable model facilitates the management of deviations and contributes to transparency and fairness.
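The monitoring half of controllability can be made concrete with a check for the "data drift" described earlier in this article. The sketch below computes a Population Stability Index (PSI) between the inputs seen at training time and those seen in production. The synthetic data, the bin count, and the 0.2 alert threshold (a commonly quoted rule of thumb, used here as an assumption) are illustrative choices.

```python
import math
import random

def psi(expected, actual, bins=10):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins

    def shares(values):
        counts = [0] * bins
        for v in values:
            index = int((v - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

rng = random.Random(42)
reference = [rng.gauss(50.0, 10.0) for _ in range(1000)]  # training-time inputs
stable = [rng.gauss(50.0, 10.0) for _ in range(1000)]     # same distribution
shifted = [rng.gauss(65.0, 10.0) for _ in range(1000)]    # distribution has moved

for name, sample in (("stable", stable), ("shifted", shifted)):
    score = psi(reference, sample)
    status = "investigate" if score > 0.2 else "ok"
    print(f"{name}: PSI={score:.3f} ({status})")
```

A check like this only raises a flag. Deciding whether to retrain, roll back, or restrict the model remains a human decision, which is the adjustment half of controllability.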

Conclusion

As AI tools like Grok illustrate the potential pitfalls of deploying artificial intelligence without safeguards, it is more crucial than ever to define an ethical and responsible framework for this technology. Responsible AI must not be a mere slogan but a proactive approach that incorporates transparency, inclusion, and consideration of social impacts. Initiatives such as the Canadian Senate’s proposed bill to regulate AI use in public administrations to ensure transparency and fairness offer a concrete example of a response to these challenges.

The challenge remains immense: how can we design AI that amplifies opportunities while limiting risks? The answers will lie in increased collaboration between businesses, researchers, governments, and civil society.